Today’s 3D perception systems fall into three main categories: stereoscopic vision, structured light, and time-of-flight (including lidar). Each modality typically requires a different type of sensor: standard CMOS RGB or monochrome global shutter cameras for the first two, and more specialized direct or indirect time-of-flight sensors for the last. An active 3D perception system is a complex architecture with several key components (at minimum, an advanced illumination source). Even so, the sensor is at the heart of the solution’s performance, and it is also the system's main limitation today.

Legacy 3D imaging: the frame-based approach

A common characteristic of existing active 3D modalities is that they rely on (a) active illumination and (b) at least one frame-based sensor. Frame-based digital imaging is well suited to taking pictures of scenic views or recording movies. However, frame-based sensing is optimized neither for machine vision nor for 3D perception. Relying on a frame-based sensor limits system performance in several key respects, summarized below:

- Frame rate: The first limitation is the frame rate itself. Current 3D systems typically run at 30 to 60 fps and rarely exceed 100 fps, so a new frame arrives only every 10 to 33 ms. In the best case, a single frame is not useful on its own. In the worst case, it can be unreliable. A frame-based depth system therefore has to acquire multiple sequential frames to produce a reliable understanding of a scene. This has a triple effect: slow acquisition of the environment, high data latency, and sensitivity to motion.

- Power: Using a frame-based sensor in a depth system typically draws a lot of power. One way to reduce this draw is to lower the sensor resolution or frame rate, but either choice degrades system quality. By nature, a global shutter (or fast rolling shutter) sensor or a ToF sensor needs a lot of power, in the range of hundreds of milliwatts, to operate at a desirable frame rate and resolution. This power constraint is the main reason the resolution of today’s 3D sensors is typically much lower than that of standard image sensors. The illumination plays an equally important role in the power consumption of these legacy 3D systems. Light may come from a flood illuminator or be projected as a high-density structured light pattern. Either way, at least several hundred milliwatts are required to achieve a decent signal-to-noise ratio at system level, because spreading the power over a wide field of illumination does not come for free.

- Algorithmic complexity: A third limitation is the algorithmic complexity and heavy processing footprint required to reconstruct 3D information from accumulated depth frames. This places an unnecessary computational load on the overall system, and on the central processing unit in particular. Structured light systems often also require a spatial kernel (a neighborhood of pixels) to identify each depth point, which further reduces the effective system resolution.

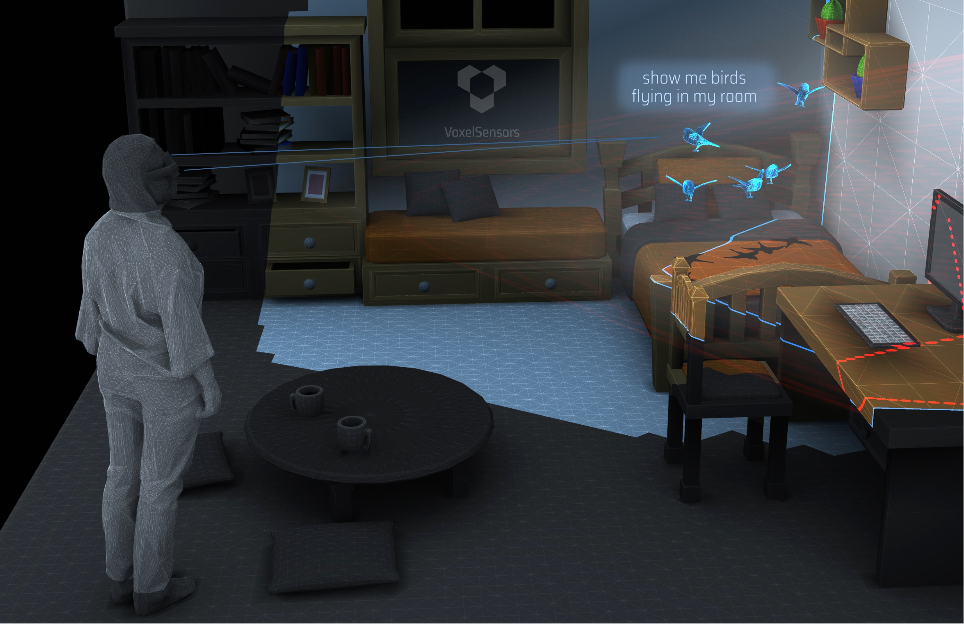

- Interference: Lastly, existing frame-based 3D sensors are vulnerable to interference from the environment and from external sources. They do not work properly under strong ambient light, or when other active 3D systems are projecting their illumination into the same room.
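The timing and power points above can be made concrete with a little arithmetic. The Python sketch below computes the frame period for a given frame rate, a lower bound on latency when several sequential frames are needed, and the pixel readout rate a sensor must sustain. The helper names are hypothetical. The choice of three frames per depth estimate is an illustrative assumption, since actual counts depend on the system. Treating pixel rate as a proxy for sensor power is also only an assumption, used here because lowering resolution or frame rate is the lever the text describes.

```python
# Frame-based timing and readout figures. Frame rates come from the text;
# the frame count (3) and resolution (640x480) below are illustrative assumptions.


def frame_period_ms(fps: float) -> float:
    """Time between two consecutive frames, in milliseconds."""
    return 1000.0 / fps


def depth_latency_ms(fps: float, frames_needed: int) -> float:
    """Lower bound on latency when `frames_needed` sequential frames are required.

    Exposure and processing time are ignored, so real latency is higher.
    """
    return frames_needed * frame_period_ms(fps)


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second the sensor must read out.

    Assumption: readout power grows roughly with this figure, which is why
    lowering resolution or frame rate is the usual way to save power.
    """
    return width * height * fps


for fps in (30, 60, 100):
    print(f"{fps:>3} fps: {frame_period_ms(fps):5.1f} ms/frame, "
          f"{depth_latency_ms(fps, 3):5.1f} ms for 3 frames, "
          f"{pixel_rate(640, 480, fps) / 1e6:5.1f} Mpixel/s at 640x480")
```

Under these assumptions, a three-frame estimate takes at least 100 ms at 30 fps and 30 ms at 100 fps, before any processing.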

Concentrating laser power into a single dot

To reduce the power consumption of an active 3D system, the first step is to rethink how the active illumination operates. Flood illumination and structured pattern projection spread the optical power across a wide field of illumination, which is highly inefficient. A different approach is to generate a single dot and use a scanning device (e.g., a MEMS scanner) to cover the scene at high speed. This enables a system with a dramatically reduced optical power budget, up to 10x lower than current high-end illumination systems. This greatly reduces system power draw and eases laser eye-safety concerns. Concentrating the available illumination power into a single dot also brings the crucial benefit of a high signal-to-noise ratio (SNR), which is key for outdoor operation.
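The SNR benefit of a single dot comes down to irradiance. For the same optical power, the power per unit area scales inversely with the illuminated area, while the ambient light landing on each point is unchanged. The Python sketch below compares the rectangular patch lit by a flood illuminator with a single circular dot. The 60° × 45° field of illumination and the 1 mrad dot divergence are assumptions chosen for illustration, not product specifications. The result is a peak, per-point figure. On its own, it does not describe the time-averaged power of a scanned system or the up-to-10x budget reduction quoted above.

```python
import math


def flood_area_m2(distance_m: float, hfov_deg: float, vfov_deg: float) -> float:
    """Area of the rectangular patch lit by a flood illuminator at a given distance."""
    w = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    h = 2 * distance_m * math.tan(math.radians(vfov_deg) / 2)
    return w * h


def dot_area_m2(distance_m: float, divergence_mrad: float) -> float:
    """Area of a circular laser dot with the given full-angle divergence."""
    r = distance_m * math.tan(divergence_mrad * 1e-3 / 2)
    return math.pi * r * r


def irradiance_gain(distance_m: float, hfov_deg: float, vfov_deg: float,
                    divergence_mrad: float) -> float:
    """Ratio of dot irradiance to flood irradiance (W/m^2) for equal optical power.

    Both areas scale with distance squared, so the ratio does not depend on distance.
    """
    return (flood_area_m2(distance_m, hfov_deg, vfov_deg)
            / dot_area_m2(distance_m, divergence_mrad))


# Illustrative (assumed) geometry: 60 x 45 degree flood vs. a 1 mrad dot at 1 m.
gain = irradiance_gain(1.0, 60.0, 45.0, 1.0)
print(f"peak irradiance gain: {gain:.2e}")
```

Because ambient light adds the same background in both cases, the much higher per-point irradiance of the dot is what translates into higher SNR for each sample.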

Active Event Sensor

Active Event Sensor (AES) is a new sensor architecture that provides asynchronous, binary detection of events when viewing an active light source or pattern. VoxelSensors’ AES does not acquire or output frames. Instead, each pixel is smart and generates an event only when it detects the active light signal. Each position sample requires approximately ten photons on average, at sample rates of up to 100 MHz. In other words, the location of an active event in the image plane can be obtained as often as every 10 ns. Key patented technologies implemented in the single-photon sensor enable ambient light rejection, even in bright ambient conditions.
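To contrast the event-based model with frames, the Python sketch below treats the sensor output as a stream of (x, y, timestamp) events that can be consumed one at a time, with no frame buffer. The 100 MHz rate and ten-photons-per-sample figures come from the text. The `Event` record and the raster-scan generator are hypothetical and do not represent VoxelSensors' actual data format or API. A real MEMS scanner follows its own trajectory, and the sketch does not model ambient light rejection.

```python
from collections.abc import Iterator
from dataclasses import dataclass

# Figures quoted in the text.
MAX_SAMPLE_RATE_HZ = 100_000_000  # up to 100 MHz position samples
PHOTONS_PER_SAMPLE = 10           # ~10 photons per sample on average


@dataclass(frozen=True)
class Event:
    """Hypothetical event record: pixel coordinates plus a timestamp in ns."""
    x: int
    y: int
    t_ns: int


def min_event_interval_ns(rate_hz: int = MAX_SAMPLE_RATE_HZ) -> float:
    """Shortest time between two consecutive position samples."""
    return 1e9 / rate_hz


def samples_per_frame_period(fps: int, rate_hz: int = MAX_SAMPLE_RATE_HZ) -> int:
    """Position samples that fit in the time a frame-based sensor delivers one frame."""
    return rate_hz // fps


def simulate_scan(width: int, height: int,
                  rate_hz: int = MAX_SAMPLE_RATE_HZ) -> Iterator[Event]:
    """Hypothetical raster scan of the laser dot: one event per position,
    emitted at the maximum sample rate (for illustration only)."""
    dt_ns = 1_000_000_000 // rate_hz
    t_ns = 0
    for y in range(height):
        for x in range(width):
            yield Event(x, y, t_ns)
            t_ns += dt_ns


# Each event can be handled as soon as it arrives; no frame is ever assembled.
last = None
for ev in simulate_scan(4, 3):
    last = ev
print(last)  # Event(x=3, y=2, t_ns=110)
print(samples_per_frame_period(60))  # 1666666 samples in one 60 fps frame period
```

At the maximum rate, the time a 60 fps camera takes to deliver one frame is enough for well over a million individual position samples. This is the latency gap the event-based approach targets.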