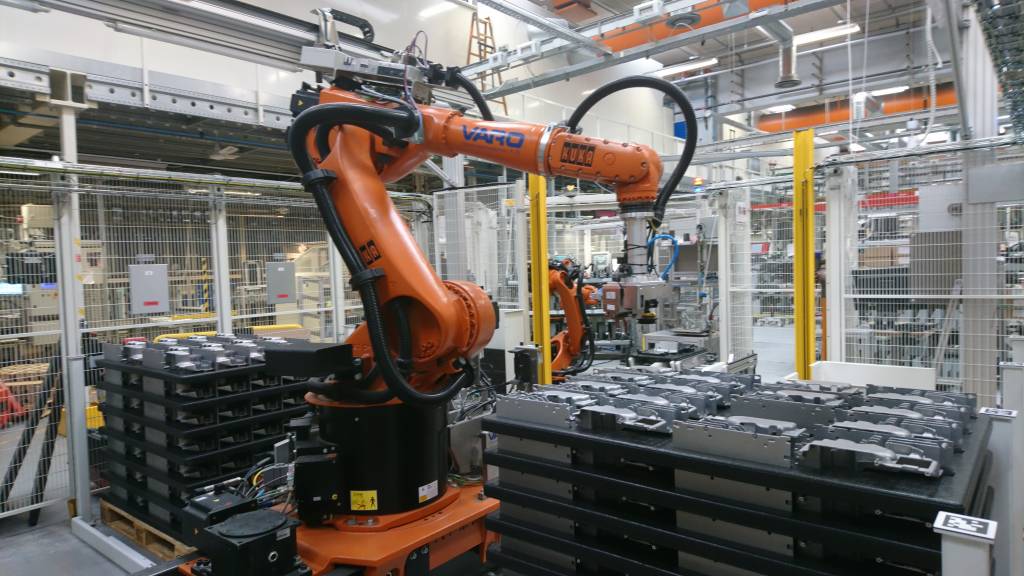

Perception is one of the key technologies required to take robotic automation a step beyond classical production lines (e.g. in automotive). To enable a wider use of robots in industry, logistics and even specialized areas such as lab automation, robots must be able to reliably perform complex processes such as pick-and-place, machine tending or assembly tasks. Smart vision solutions, comprising advanced hardware as well as comprehensive software, are a core element of such applications. Munich-based Roboception offers such 3D vision solutions and has recently introduced a 3D stereo vision platform that not only features leading-edge resolution and accuracy but, thanks to its use of the Nvidia Jetson Orin series, also runs the company’s own software as well as any additional software a user may want to add, all within one compact device.

In addition, the company has developed a unique approach to providing robotic systems with comprehensive "visual abilities". For humans, visual perception is a powerful skill that is largely based on experience. Replicating this notion of experience in a robotic application is critical, especially when objects and their locations are not reliably known and/or processes are not homogeneous. In flexible processes, classical robot and application teaching procedures break down because of the virtually unlimited variety of possibilities. Users also need rapid feasibility assessments and, once implementation begins, are keen to avoid tedious data recording, which often entails system downtime.

Combining model-based synthetic data with real data and machine learning proves to be a highly viable and far more efficient alternative to the resource-intensive, error-prone generation of the extensive datasets that deep learning approaches require. This quickly generated, customized training data is tailored to the individual object, task and application requirements, and is limited only by the available computing resources and knowledge of the process parameters. Detection templates based on these application-specific datasets provide consistent and reliable recognition results under varying conditions.

Reducing cycle times

From complex pick-and-place tasks and tedious machine tending operations to the careful handling of delicate laboratory equipment, integrators and end users continue to find 3D robot vision to be the solution to their more challenging automation tasks. Users appreciate that set-up time shrinks from what is often weeks to mere days, because the system is already trained before its physical installation. They have also observed that the required shop floor space decreases by up to 50%.

In Denmark, Danfoss Drives A/S was struggling with a slow and unreliable kitting cell until a vision component not only stabilized its operations but also nearly halved both the time of an individual pick-and-place operation and the overall cycle time. At another Danfoss site, integrator Quality Robot Systems (QRS) successfully replaced the manual handling of more than 100 different components with a robotic solution, thanks to vision-enabled detection, grasping and placement of these parts.

In logistics, advanced vision capabilities enable efficient (de-)palletizing and handling of goods. Running optical character recognition (OCR) in the sensor’s UserSpace, for example, enables seamless identification and sorting of components. Notable improvements reported after the first few months of use include cycle-time improvements of 30% or more, as well as a considerable decrease in overall system costs, with savings of 30% compared with initial projections.

Outlook

In the coming months and years, Roboception will work to tackle the remaining limitations in 3D vision applications. The team is working on improved handling of mixed known and unknown objects, on detecting and localizing even the most difficult objects and materials (e.g. flexible and/or transparent ones), and on processing the data needed to support functionality in the service robotics domain.

www.roboception.com