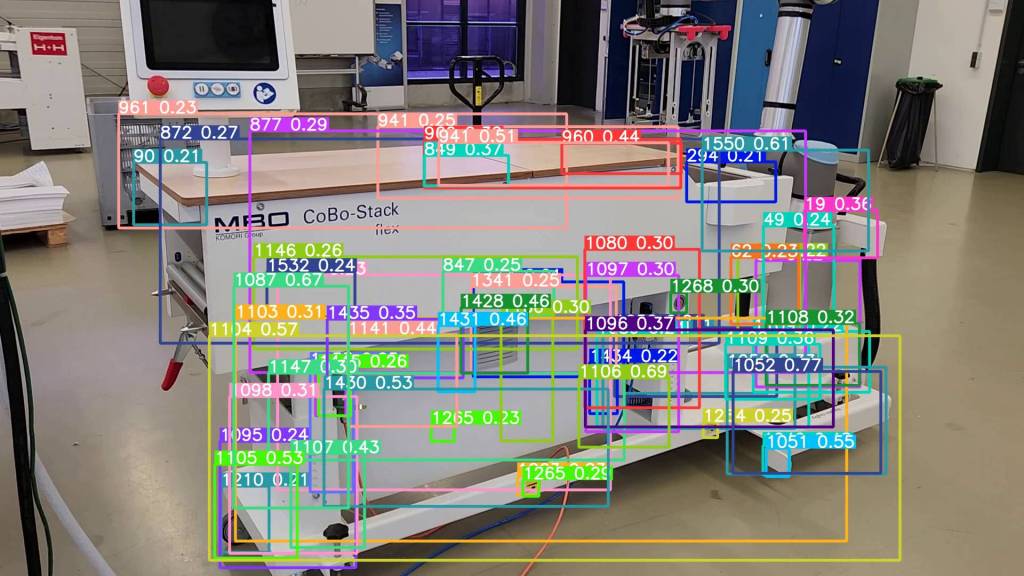

One of the primary challenges in training machine learning models for spare parts identification (an object detection task) is the need for large amounts of diverse training data. Each spare part requires hundreds to thousands of images covering different angles, lighting conditions, distances, and backgrounds. Each image must also be carefully annotated with the exact position of the spare part in the image. Traditionally, this data collection and annotation is done by hand, which makes it labor-intensive and expensive.

To address these challenges, synthavo uses synthetic data generation. Starting from 3D CAD models of the machines, the company automatically generates a comprehensive set of training images that vary in viewing angle, lighting, distance, and occlusion, producing a robust dataset for training. The key advantage is that both the images and their annotations are generated automatically.
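To make the idea of automatic annotation concrete, here is a minimal sketch of two common building blocks: randomizing viewpoint, distance, lighting, and occlusion for each rendered image (often called domain randomization), and deriving a bounding-box label by projecting the CAD model's vertices through a pinhole camera. This is not synthavo's pipeline. The parameter ranges, the camera intrinsics, and the names `RenderParams`, `sample_params`, and `project_bbox` are hypothetical, and the actual rendering step (which would consume the lighting and occlusion values) is left out.

```python
import math
import random
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class RenderParams:
    """Randomized settings for one synthetic image (hypothetical ranges)."""
    azimuth_deg: float
    elevation_deg: float
    distance_m: float
    light_intensity: float     # would be consumed by a renderer (not shown)
    occlusion_fraction: float  # share of the part to hide behind clutter (not shown)


def sample_params(rng: random.Random) -> RenderParams:
    # Domain randomization: each nuisance factor is drawn independently.
    return RenderParams(
        azimuth_deg=rng.uniform(0.0, 360.0),
        elevation_deg=rng.uniform(-30.0, 60.0),  # stays clear of +/-90 deg
        distance_m=rng.uniform(0.5, 2.0),
        light_intensity=rng.uniform(0.2, 1.5),
        occlusion_fraction=rng.uniform(0.0, 0.9),
    )


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def project_bbox(vertices, p: RenderParams, width=640, height=480, focal_px=500.0):
    """Return (x_min, y_min, x_max, y_max) in pixels, or None if not visible.

    The camera sits on a sphere around the origin and looks at it (pinhole model).
    """
    az, el = math.radians(p.azimuth_deg), math.radians(p.elevation_deg)
    cam = (p.distance_m * math.cos(el) * math.cos(az),
           p.distance_m * math.cos(el) * math.sin(az),
           p.distance_m * math.sin(el))
    forward = _unit(_sub((0.0, 0.0, 0.0), cam))
    right = _unit(_cross(forward, (0.0, 0.0, 1.0)))  # world "up" is +z
    up = _cross(right, forward)
    us, vs = [], []
    for vert in vertices:
        d = _sub(vert, cam)
        z = _dot(d, forward)  # depth along the viewing direction
        if z <= 1e-9:  # behind the camera: cannot be projected
            continue
        us.append(width / 2 + focal_px * _dot(d, right) / z)
        vs.append(height / 2 - focal_px * _dot(d, up) / z)  # image y points down
    if not us:
        return None
    # Clip the box to the image borders.
    x0, x1 = max(0.0, min(us)), min(float(width), max(us))
    y0, y1 = max(0.0, min(vs)), min(float(height), max(vs))
    if x0 >= x1 or y0 >= y1:
        return None  # entirely outside the image
    return (x0, y0, x1, y1)


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-in for a CAD model: the 8 corners of a 10 cm cube at the origin.
    cube = [(x, y, z) for x in (-0.05, 0.05) for y in (-0.05, 0.05) for z in (-0.05, 0.05)]
    for _ in range(3):
        params = sample_params(rng)
        print(params, project_bbox(cube, params))
```

Because the label is computed from the same camera that would render the image, every generated image comes with a box at no extra cost. For COCO-style annotation files, the box would be converted to `[x_min, y_min, width, height]`; a production system would typically project the full mesh and account for occluders rather than just the vertices of an unoccluded part.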

A Real-world Example

Consider a medium-sized machine manufacturer with 100,000 spare parts, assuming 100 images per part for training. At 35 seconds of annotation per image (source: COCO dataset, T.-Y. Lin et al.), the resulting 10 million images would take around 100,000 hours to annotate, costing approximately 1.2 million euros at the German minimum wage. This does not include the additional labor of taking the photos, which makes the task impractically large. Synthetic data generation, by contrast, can produce an even more diverse dataset, images and annotations included, in under 9,000 hours on a single computing node. Parallelizing across multiple nodes reduces this to several weeks, making the approach feasible and cost-effective.
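The estimate above is straightforward arithmetic, sketched here so the assumptions can be varied. The hourly wage of 12 euros is the rate implied by the article's own figures (1.2 million euros for 100,000 hours); the cluster sizes are hypothetical, and `parallel_weeks` assumes the rendering work splits perfectly across nodes, which real clusters only approximate.

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_WEEK = 24 * 7


def manual_annotation(parts: int, images_per_part: int,
                      seconds_per_image: float, wage_eur_per_hour: float):
    """Return (images, annotation hours, labor cost in EUR) for manual labeling."""
    images = parts * images_per_part
    hours = images * seconds_per_image / SECONDS_PER_HOUR
    return images, hours, hours * wage_eur_per_hour


def parallel_weeks(total_node_hours: float, nodes: int) -> float:
    """Wall-clock weeks if the work splits perfectly across `nodes` (an idealization)."""
    return total_node_hours / nodes / HOURS_PER_WEEK


if __name__ == "__main__":
    images, hours, cost = manual_annotation(100_000, 100, 35, 12.0)
    print(f"{images:,} images, {hours:,.0f} h, {cost:,.0f} EUR")

    # 9,000 single-node hours for the same number of images implies ~3.24 s per image.
    print(f"{9_000 * SECONDS_PER_HOUR / images:.2f} s per synthetic image")
    for nodes in (4, 8, 16):  # hypothetical cluster sizes
        print(f"{nodes} nodes: {parallel_weeks(9_000, nodes):.1f} weeks")
```

With 8 to 16 nodes, the 9,000 node-hours shrink to roughly three to seven weeks of wall-clock time, which is where the "several weeks" figure comes from under these assumptions.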

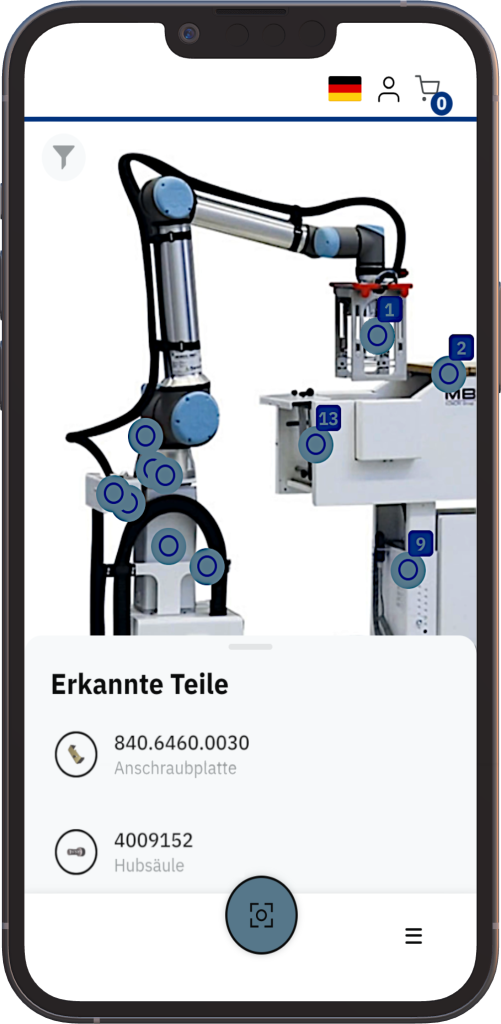

Spare Parts Identification in Installed and Removed States

The trained models identify spare parts both when they are installed in a machine and after they have been removed, an improvement over traditional methods, which struggle with parts in unusual positions. Identification remains precise even when parts are heavily soiled, worn, or up to 90 percent occluded. Onboarding is fully automated: the system generates training data from 3D CAD data without manual intervention, enabling one-click training for up to 100,000 spare parts. To protect proprietary data, only the trained deep learning models are stored in the cloud; the CAD and other 3D data are not.

More Efficiency and Revenue Growth in After Sales

The solution from synthavo offers advantages in three key areas. First, it increases efficiency in after-sales service by up to 74 percent: precise and rapid spare parts identification reduces errors and cuts machine downtime, for example from 57.5 to 15 hours. Second, it helps close the skills gap in the manufacturing sector by shortening the average training period for service technicians from 18 to 12 months, which makes it easier to onboard new personnel and streamline service operations. Third, OEMs gain a competitive edge in the aftermarket by guiding customers into their own sales channels and supporting higher prices for new machines. Customers of synthavo increase their revenue by up to 12 percent.
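The before/after figures in this paragraph can be checked with a simple relative-reduction formula. The function name `percent_reduction` is hypothetical, and the source does not say how the 74 percent efficiency figure was derived; the sketch only shows that it matches the downtime example.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100


if __name__ == "__main__":
    print(f"Downtime: {percent_reduction(57.5, 15):.1f} % shorter")      # 57.5 h -> 15 h
    print(f"Training period: {percent_reduction(18, 12):.1f} % shorter")  # 18 -> 12 months
```

The downtime example works out to about 73.9 percent, consistent with the "up to 74 percent" figure, while the training period shrinks by about a third.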