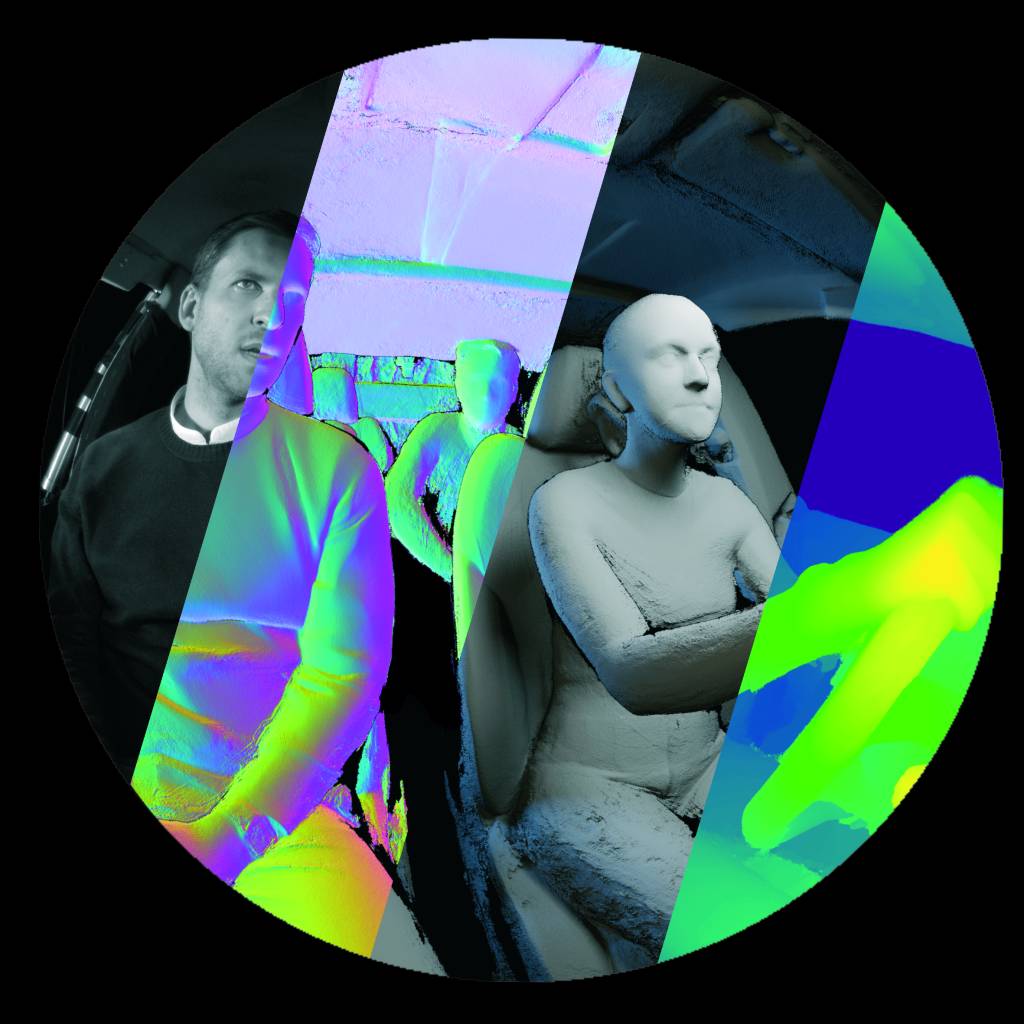

By adopting the solution, computer vision engineers can capture the scenes they need with their own devices. rabbitAI’s role is to extract 3D models from real-world image data and automatically derive high-precision, pixel-perfect ground truth, such as dense depth for monocular and stereo depth estimation or human body poses. By augmenting their real-world scenarios, engineers also gain additional data from them. The result combines real data and synthetic components, all from a single source. This leaves more room for algorithmic optimization and leads to reduced hardware costs, quicker inference times and shorter development cycles.

Four Steps to an Enhanced Development Cycle

The following four steps outline the path to reliable and precise training data in cooperation with rabbitAI. The resulting data can be used, for example, to meet Euro NCAP standards or to achieve precise detection of body poses and hand gestures in the VR sector:

- 1st step: Device-specific image capture: rabbitAI develops a reference system around the customer’s target system. This ensures that images are captured with the actual production cameras and that the ground truth generated in later steps is precisely aligned with the device-specific camera characteristics.

- 2nd step: 3D ground truth perception: 3D models of dynamic scenarios are extracted from real-world image data with 1 mm resolution at room scale. This allows spatial perception algorithms to be heavily optimized for the individual hardware setup.

- 3rd step: Augmented synthetic scenarios: Building on these high-precision 3D models, photorealistic graphics can be added to existing real images, producing hundreds or thousands of new permutations of training scenarios from a single real-world capture. As a result, every necessary and feasible scenario can be covered in training at high speed. Additionally, novel camera views that were never physically captured can be synthesized from real-world data to facilitate virtual testing, for example for benchmarking or for newer generations of hardware.

- 4th step: Automated data pipeline: Requested scenarios are pushed directly and on demand to the customer’s database. To provide high-quality data, this step also ensures that application errors are detected and that quality assurance mechanisms take effect at the right points.

Proven for In-cabin Sensing and VR Applications

The solution offers ideal ground truth for applications requiring precise recognition of gestures, body posture, or room environments. It has proven particularly successful in two use cases:

- In-cabin sensing: OEMs and Tier 1 suppliers must navigate stringent functional safety requirements for in-cabin sensing. Meeting these regulations demands rigorous validation, but the challenge lies in efficiently collecting the necessary validation data. The advanced reference data technology enables full coverage of the regulated specifications on real-world data while remaining scalable.

- VR applications: In the fast-paced world of VR equipment, having cutting-edge 3D perception technology is just the start. Achieving more requires expert guidance to accelerate development cycles. Training with real-world ground truth therefore includes support for optimizing camera and sensor integration, benchmarking current in-house practices against industry standards, and tailored solutions for early-stage VR/AR development.

Faster Data Acquisition by 100x

CEO and co-founder Karsten Krispin sums it up: “Working with our real-world ground truth solution accelerates data acquisition by 100x. Shorter iterations, utilizing existing devices, improving algorithms, and maximizing the use of already captured scenes, all in collaboration with computer vision engineers, create significant room for innovation. We also offer benchmarks and status checks,” says Krispin. “Anyone who would like to check on their current training data is welcome to get in touch with us.”