Deep learning projects have brought new quality, but also new challenges, to the machine vision market. Many companies are now trying to answer tricky project-management questions. How should image datasets be stored so that they are both secure and convenient? Which tools should be used to prepare training data, such as defect annotations? How can this material be exchanged between team members and with customers? And how can versions of datasets and projects be tracked, so that it is always clear which model was trained on which data? The Zillin.io web portal was created to answer all of these questions. It is an online collaboration platform for teams building deep-learning solutions for image analysis. After registering on the platform, you land directly on the main screen, which shows your default workspace with an initially empty list of projects. Here you can add new projects, datasets and team members.

Role-Based Permissions

Let us start with the team. Zillin has been designed with industrial and medical applications in mind, where strict access control is of the highest importance. Thus, for each team member you can define role-based permissions. Initially you are the Owner, and you can invite Managers, Developers, Collaborators and Guests. For manual annotation work you can also invite Workers, who have minimal access to your data: in batch mode, they see only the current image they are supposed to annotate or review. Your customer will most often be a Collaborator, someone who can upload datasets and make annotations but does not need to work on projects. Of course, in the longer run you will have multiple applications or customer projects that require different access levels. For that you can use separate workspaces. For each workspace you can define a completely new team with a completely new set of permissions.
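To make the access model concrete, here is a minimal sketch of per-workspace, role-based checks. It is not Zillin's API: the `Role`, `Action`, `PERMISSIONS` and `Workspace` names are hypothetical. The Owner, Collaborator and Worker rows follow the description above (treating "no need to work on projects" as "no project rights" is our reading), while the Manager, Developer and Guest rows are assumptions for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum, auto


class Role(Enum):
    OWNER = auto()
    MANAGER = auto()
    DEVELOPER = auto()
    COLLABORATOR = auto()
    GUEST = auto()
    WORKER = auto()


class Action(Enum):
    INVITE_MEMBERS = auto()
    UPLOAD_DATASET = auto()
    ANNOTATE = auto()
    REVIEW = auto()
    WORK_ON_PROJECTS = auto()
    VIEW = auto()


# Hypothetical capability matrix. Owner, Collaborator and Worker rows follow
# the article; Manager, Developer and Guest rows are assumptions.
PERMISSIONS: dict[Role, frozenset[Action]] = {
    Role.OWNER: frozenset(Action),
    Role.MANAGER: frozenset(Action),  # assumption
    Role.DEVELOPER: frozenset(Action) - {Action.INVITE_MEMBERS},  # assumption
    Role.COLLABORATOR: frozenset({Action.UPLOAD_DATASET, Action.ANNOTATE, Action.VIEW}),
    Role.GUEST: frozenset({Action.VIEW}),  # assumption
    Role.WORKER: frozenset({Action.ANNOTATE, Action.REVIEW}),
}


@dataclass
class Workspace:
    """Each workspace has its own team, so roles never leak between workspaces."""

    name: str
    members: dict[str, Role] = field(default_factory=dict)
    # For Workers: the single image each one is currently assigned in batch mode.
    current_image: dict[str, str] = field(default_factory=dict)

    def can(self, user: str, action: Action, image_id: str | None = None) -> bool:
        role = self.members.get(user)
        if role is None:
            return False  # not a member of this workspace at all
        if action not in PERMISSIONS[role]:
            return False
        if role is Role.WORKER:
            # Workers may only touch the image currently assigned to them.
            return image_id is not None and self.current_image.get(user) == image_id
        return True
```

Because membership lives on the `Workspace` object, inviting the same customer to a second workspace with a different role is just another entry in that workspace's `members` map.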

Defining Datasets

When the team is set up, we can start uploading datasets. A dataset is a collection of images, which you can import from your disk or from the cloud using a CSV file. Datasets are the central element of deep learning projects. You can think of a dataset as a folder, but there is more to it: Zillin enforces a strict workflow in which each dataset is either a "draft" or "published". Once it is published, it can be used in projects. Once it is used in projects, no more images can be added to it. This brings some discipline and ensures that when two people are talking about the same published dataset, they are always talking about the same set of images. If someone later finds more interesting images, a new dataset can always be added.
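The dataset workflow is essentially a small state machine. The sketch below models it under stated assumptions: `Dataset`, `DatasetState` and `DatasetLockedError` are hypothetical names, not part of Zillin, and the sketch follows the rules literally as written above (only published datasets can be attached to projects; adding images is refused once any project uses the dataset).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class DatasetState(Enum):
    DRAFT = "draft"
    PUBLISHED = "published"


class DatasetLockedError(Exception):
    """Raised when the workflow rules forbid the requested change."""


@dataclass
class Dataset:
    name: str
    state: DatasetState = DatasetState.DRAFT
    images: list[str] = field(default_factory=list)
    used_by: set[str] = field(default_factory=set)  # names of projects using it

    def add_images(self, paths: list[str]) -> None:
        # Once a dataset is used in a project, its image set is frozen.
        if self.used_by:
            raise DatasetLockedError(
                f"{self.name!r} is used by {sorted(self.used_by)}; add a new dataset instead"
            )
        self.images.extend(paths)

    def publish(self) -> None:
        self.state = DatasetState.PUBLISHED

    def attach_to(self, project: str) -> None:
        # Only published datasets may be used in projects.
        if self.state is not DatasetState.PUBLISHED:
            raise DatasetLockedError(f"{self.name!r} is still a draft")
        self.used_by.add(project)


if __name__ == "__main__":
    scratches = Dataset("scratches")
    scratches.add_images(["a.png", "b.png"])
    scratches.publish()
    scratches.attach_to("surface-inspection")
    try:
        scratches.add_images(["c.png"])
    except DatasetLockedError as err:
        print(err)  # the frozen dataset refuses new images
```

Raising an error instead of silently ignoring the new images mirrors the intent of the rule: the fix for "I found more images" is a new dataset, not a mutated one.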

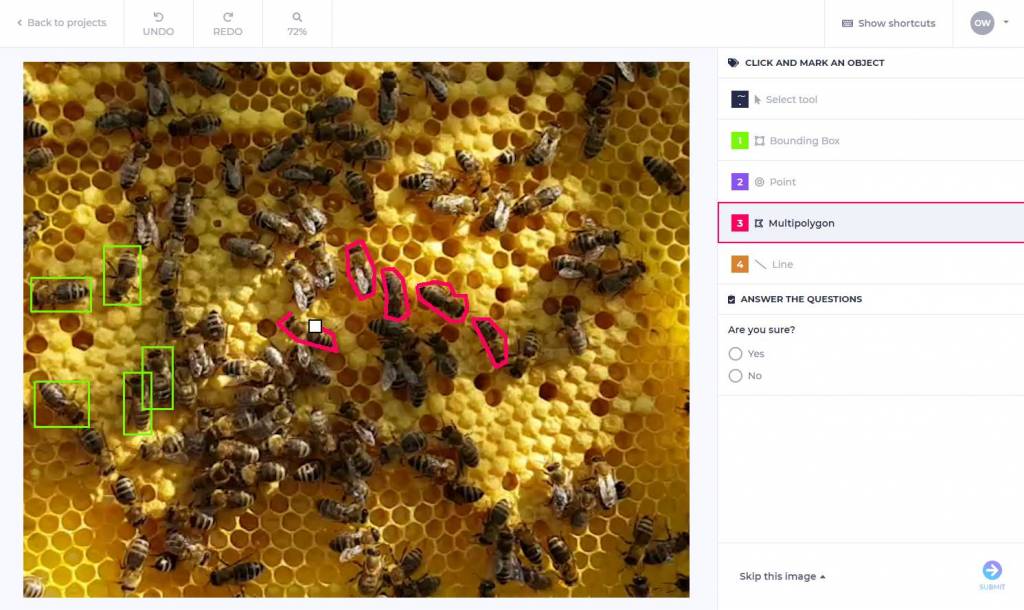

Projects

Next come projects, which is where we define the types of markings and use the visual tools to annotate objects, features or defects. Currently Zillin supports annotating images with Bounding Boxes, Lines, Points, Polygons and Oriented Rectangles. There are also general questions that can provide additional metadata for an image as a whole: Yes/No, Radio, Checkbox or Open-ended (any text). When a project is ready, Annotators and Reviewers are assigned to process all images from the selected datasets and provide annotations. By default, this process assumes that one group of people does the markings and someone else then reviews the results, applying corrections or returning images for repeated annotation. This is especially important for projects that require high-quality annotations, as even small mistakes can badly affect the training of deep learning models.
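The annotate-then-review loop can be sketched as a per-image task that moves between states. This is an illustrative model only: `Shape`, `Status`, `Marking` and `ImageTask` are hypothetical names, and the rule that a reviewer must differ from the annotator is our encoding of "someone else then reviews the results", not a documented Zillin check.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Shape(Enum):
    BOUNDING_BOX = "bounding box"
    LINE = "line"
    POINT = "point"
    POLYGON = "polygon"
    ORIENTED_RECTANGLE = "oriented rectangle"


class Status(Enum):
    TO_ANNOTATE = "to annotate"
    TO_REVIEW = "to review"
    ACCEPTED = "accepted"


@dataclass
class Marking:
    shape: Shape
    label: str
    points: list[tuple[float, float]]  # vertices in image coordinates


@dataclass
class ImageTask:
    image_id: str
    status: Status = Status.TO_ANNOTATE
    markings: list[Marking] = field(default_factory=list)
    annotator: str | None = None
    rounds: int = 0  # how many times the image went through annotation

    def submit(self, annotator: str, markings: list[Marking]) -> None:
        if self.status is not Status.TO_ANNOTATE:
            raise ValueError(f"{self.image_id} is not waiting for annotation")
        self.annotator = annotator
        self.markings = list(markings)
        self.rounds += 1
        self.status = Status.TO_REVIEW

    def review(self, reviewer: str, accept: bool,
               corrected: list[Marking] | None = None) -> None:
        if self.status is not Status.TO_REVIEW:
            raise ValueError(f"{self.image_id} is not waiting for review")
        # Assumed rule: the reviewer must be a different person than the annotator.
        if reviewer == self.annotator:
            raise PermissionError("annotators cannot review their own work")
        if accept:
            if corrected is not None:
                self.markings = list(corrected)  # reviewer applies corrections
            self.status = Status.ACCEPTED
        else:
            self.status = Status.TO_ANNOTATE  # returned for repeated annotation
```

Keeping a `rounds` counter is one cheap way to spot images that bounce between annotators and reviewers, which often points to an ambiguous labeling guideline.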